Gametrak Instrument Controller

A musical instrument rises from the ashes of an obsolete game controller.

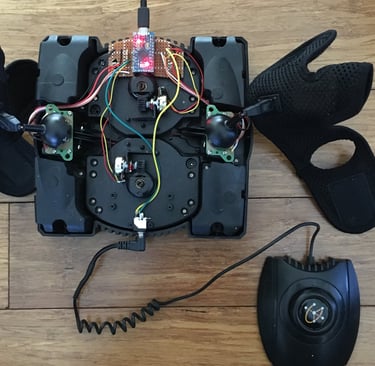

The Gametrak is a game controller released in the early 2000s for the PlayStation 2. Like many other input devices, it features two analog joysticks, each made up of two potentiometers mounted at right angles to each other (the X and Y axes). Unlike other controllers, however, the Gametrak adds a third potentiometer to each joystick. This potentiometer is connected to a spool of (I believe) nylon filament that the user extends along the Z axis through gloves attached to the end of each tether.

This unique control structure (originally created for golf and boxing simulators on the PS2) makes the Gametrak an excellent candidate for gestural control in musical applications. Although it has long been discontinued, it has found a second life in the realm of NIMEs (New Interfaces for Musical Expression) and interface-based composition. I first encountered the device as a musical controller at a performance by the Stanford Laptop Orchestra (SLOrk), in which an ensemble performs a collaborative soundscape on Gametraks, and I was immediately hooked.

I wanted to get my hands on one of my own. They are no longer manufactured, but they can still be found on eBay for a reasonable price. Generally, these units communicate using Sony’s proprietary protocol, but they can also use the HID (Human Interface Device) protocol. Enabling it requires removing and resoldering a resistor on the internal PCB. HID is recognized natively by all major operating systems. Most importantly, it can be read as a data stream inside programming environments like Max/MSP or Pure Data with very little hardware hacking.

However, there appear to be at least two revisions of the control PCB in circulation, with nothing on the outside of the device to tell them apart. The unit I purchased was (I believe) an earlier revision that did not support HID, rendering it almost useless for my purposes.

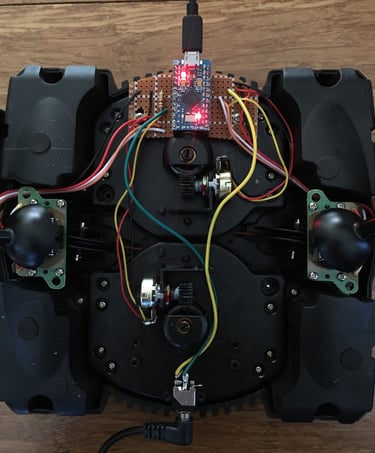

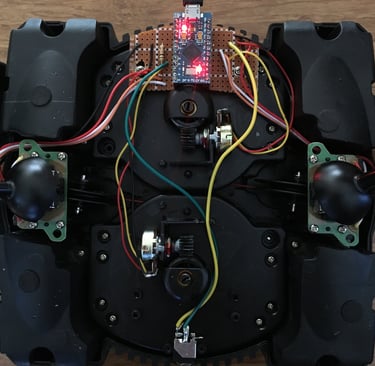

Feeling impatient and eager to use the device, I decided to give it an upgrade by replacing the internal control board with my own microcontroller. Some Arduino models can be recognized as HID or MIDI devices by most operating systems. These are generally the boards with native USB support, such as the ATmega32U4-based Pro Micro I chose for this project. Fortunately, the Gametrak’s analog potentiometers were very easy to wire to the Arduino’s analog pins. I also wired one extra 3.5mm jack to a digital pin for the detachable footswitch. The Arduino sketch is very simple. It reads the analog voltages from the six potentiometers (three per hand) plus the footswitch state and converts them into HID streams. It would also be easy to turn the device into a USB MIDI controller just by altering the Arduino sketch, if one wanted to use it inside a DAW or a standalone software synth.
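The original sketch isn’t reproduced here. As a hedged illustration of the USB MIDI variant mentioned above, the following Python sketch shows the per-frame logic such a sketch might contain. It scales each raw reading to a 7-bit value and packages the readings as MIDI Control Change messages. Several details are assumptions for illustration only. These include the CC numbers, the optional calibration range (`lo`/`hi`, since the Gametrak’s pots may not sweep the full 0–1023 range), and the helper names `adc_to_cc` and `frame_to_midi`. On the board itself, the same logic would sit in `loop()` around `analogRead()` and `digitalRead()` calls.

```python
ADC_MAX = 1023  # analogRead() range for the 10-bit ADC on the ATmega32U4 (Pro Micro)

# Hypothetical CC assignments: left X, Y, Z, then right X, Y, Z.
# CCs 20-25 are undefined in the MIDI spec, so they are free for custom use.
AXIS_CCS = (20, 21, 22, 23, 24, 25)
FOOTSWITCH_CC = 64  # conventionally the sustain (damper) pedal


def adc_to_cc(raw: int, lo: int = 0, hi: int = ADC_MAX) -> int:
    """Scale a raw reading from a calibrated [lo, hi] range to a 7-bit CC value (0-127)."""
    raw = min(max(raw, lo), hi)  # clamp readings outside the calibrated range
    return (raw - lo) * 127 // (hi - lo)


def frame_to_midi(readings, footswitch_pressed: bool, channel: int = 1):
    """Turn one frame of six axis readings plus the footswitch state into
    (status, controller, value) Control Change messages."""
    if len(readings) != len(AXIS_CCS):
        raise ValueError(f"expected {len(AXIS_CCS)} readings, got {len(readings)}")
    status = 0xB0 | (channel - 1)  # Control Change status byte, MIDI channels 1-16
    messages = [(status, cc, adc_to_cc(raw)) for cc, raw in zip(AXIS_CCS, readings)]
    messages.append((status, FOOTSWITCH_CC, 127 if footswitch_pressed else 0))
    return messages


# Example frame: left hand resting, right tether pulled out partway, footswitch down.
print(frame_to_midi([512, 512, 0, 512, 512, 700], footswitch_pressed=True))
# -> [(176, 20, 63), (176, 21, 63), (176, 22, 0), (176, 23, 63), (176, 24, 63), (176, 25, 86), (176, 64, 127)]
```

Integer scaling keeps the sketch close to what would run on an 8-bit AVR without floating point. The HID version would follow the same per-frame loop, sending axis values in a joystick report instead of CC messages.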

To test the controller, I decided to follow in others’ footsteps and try out the ~blotar object in Max/MSP, a physical-modeling synth that is a hybrid of a flute and an electric guitar. Simply mapping the six outputs of the Gametrak to individual parameters in ~blotar was a lot of fun to play around with. However, I found the one-to-one mapping somewhat difficult to control, and with only six control streams I couldn’t reach the synth’s other available parameters. To make better use of the instrument and give the controller a more gestural mapping, I decided to feed the Gametrak’s outputs into a neural network. Fortunately, Benjamin Day Smith has a collection of machine-learning externals for Max/MSP that includes ml.mlp. This easy-to-use multilayer perceptron (a simple neural network) works perfectly for mapping a handful of control streams onto many synth parameters. I recorded the Gametrak’s outputs in various positions (arms outstretched, arms down, one arm up, etc.) and paired each with a parameter preset in ~blotar. This produced a small training dataset, which I then fed to ml.mlp.
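ml.mlp’s internals and API aren’t described here. As a hedged illustration of the general idea, here is a minimal pure-Python sketch of a multilayer perceptron regressing from a few recorded poses to synth presets. It is not ml.mlp’s implementation. Everything in it is a made-up assumption for illustration: the pose values, the three preset parameters, the network size (8 tanh hidden units), the learning rate, and the epoch count.

```python
import math
import random

# Hypothetical training data: six normalized Gametrak readings per pose
# (left X, Y, Z, right X, Y, Z), each paired with a made-up 3-parameter preset.
POSES = [
    [0.5, 0.5, 0.1, 0.5, 0.5, 0.1],  # arms down
    [0.1, 0.5, 0.9, 0.9, 0.5, 0.9],  # arms outstretched
    [0.5, 0.9, 0.8, 0.5, 0.5, 0.1],  # left arm up
    [0.5, 0.5, 0.1, 0.5, 0.9, 0.8],  # right arm up
]
PRESETS = [
    [0.1, 0.2, 0.0],
    [0.9, 0.7, 0.5],
    [0.3, 0.9, 0.2],
    [0.6, 0.1, 0.8],
]


class TinyMLP:
    """One tanh hidden layer and linear outputs, trained by per-sample gradient descent on squared error."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def _forward(self, x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sum(w * hj for w, hj in zip(row, h)) + b
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def predict(self, x):
        return self._forward(x)[1]

    def mse(self, inputs, targets):
        errs = [(yk - tk) ** 2
                for x, t in zip(inputs, targets)
                for yk, tk in zip(self.predict(x), t)]
        return sum(errs) / len(errs)

    def train(self, inputs, targets, epochs=2000, lr=0.05):
        for _ in range(epochs):
            for x, t in zip(inputs, targets):
                h, y = self._forward(x)
                # Gradient of 0.5 * sum((y - t)^2) with respect to the outputs.
                dy = [yk - tk for yk, tk in zip(y, t)]
                # Backpropagate through w2 and tanh' before w2 is updated.
                dh = [(1.0 - h[j] ** 2) * sum(dy[k] * self.w2[k][j] for k in range(len(dy)))
                      for j in range(len(h))]
                for k, dyk in enumerate(dy):
                    for j, hj in enumerate(h):
                        self.w2[k][j] -= lr * dyk * hj
                    self.b2[k] -= lr * dyk
                for j, dhj in enumerate(dh):
                    for i, xi in enumerate(x):
                        self.w1[j][i] -= lr * dhj * xi
                    self.b1[j] -= lr * dhj


net = TinyMLP(n_in=6, n_hidden=8, n_out=3)
before = net.mse(POSES, PRESETS)
net.train(POSES, PRESETS)
after = net.mse(POSES, PRESETS)
# An untrained, in-between pose still yields a full set of three parameter values.
blend = net.predict([0.3, 0.5, 0.5, 0.7, 0.5, 0.5])
print(f"training MSE {before:.4f} -> {after:.4f}; blend = {blend}")
```

The network learns a smooth function over the whole input space rather than a lookup table. That is presumably what lets positions between the recorded poses produce new, but related, combinations of all the synth parameters at once.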

The result was striking. Suddenly, the Gametrak began to feel like a real instrument, with immediate auditory feedback to the performer and complex but predictable results tied to specific movements. Instead of juggling six separate axes, I felt that I could explore a 3D space of sounds. Each movement of my hands produced a change in timbre that would be difficult or impossible to achieve by controlling each parameter individually with a separate axis. Very cool!

As a musical interface, the Gametrak has several things going for it. For one, the gestural nature of the performer’s interactions with it lends itself very well to a clear sense of causality for the audience. That is, it is very easy to perceive the connection between the performer’s motion and a change in sound. It is also easy to pair with a variety of sound-producing algorithms, and many units require very little hardware hacking. Even for those that do, the process is straightforward for anyone with a little Arduino and soldering experience.

On the other hand, its design does present some challenges. Its control axes are continuous and give the user no inherent positional feedback, so it can be difficult to control parameters like pitch precisely, whether through a direct or an indirect mapping. Other electronic instruments such as the theremin pose a similar challenge. It can give the instrument a very steep learning curve unless it is addressed by some intermediary step in the mapping process (quantization, non-linear mapping, etc.). Or one can simply leave it unaddressed, as I chose to do in this experiment!
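Neither technique was used in this experiment. Still, as a hedged sketch of what such an intermediary step could look like, here is a small Python example of non-linear mapping and quantization for pitch. It assumes the axis value has already been normalized to 0–1. The pitch range (MIDI notes 48–72) and the C major pentatonic scale are arbitrary choices for illustration. `midi_to_hz` uses the standard 12-tone equal temperament formula with A4 (MIDI note 69) at 440 Hz.

```python
# Hypothetical scale choice: C major pentatonic, as semitone offsets from C.
PENTATONIC = (0, 2, 4, 7, 9)


def midi_to_hz(note: float) -> float:
    """12-tone equal temperament with A4 (MIDI note 69) = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def axis_to_note(axis: float, low: float = 48, high: float = 72) -> float:
    """Non-linear mapping: linear in note number, hence exponential in Hz,
    so equal hand movements give equal musical intervals."""
    axis = min(max(axis, 0.0), 1.0)
    return low + axis * (high - low)


def quantize_to_scale(note: float, scale=PENTATONIC) -> int:
    """Snap a continuous note number to the nearest scale tone (ties go to the lower note)."""
    candidates = [12 * octave + degree for octave in range(11) for degree in scale]
    return min(candidates, key=lambda c: abs(c - note))


for axis in (0.0, 0.3, 0.55, 1.0):
    note = quantize_to_scale(axis_to_note(axis))
    print(f"axis {axis:.2f} -> note {note} ({midi_to_hz(note):.1f} Hz)")
```

Hard snapping like this trades expressive glides for reliable intonation. A softer option would pull the pitch only partway toward the nearest scale tone.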